Oakminer AI

Product: AI-augmented insights platform

Role: Head of Design

Scope: Web platform, AI chat.

oakie.ai

An early-stage startup revolutionizing the way organizations leverage the expertise of their most valuable employees.

Experts use Oakminer AI to collaborate with an LLM on domain-specific workflows. Through this iterative process, the workflows are captured and can then be used to train junior team members.

Problem space & user needs.

Organizations with high-stakes, document-heavy work rely on a small number of experts who have the experience and know‑how to interpret complex data and turn it into actionable insights.

Expertise doesn’t scale:

Experts are expensive and time-constrained

Novices can access information, but not expert judgment

Training is inconsistent and difficult to standardize

GOAL: design an experience where users can work with AI inside trust-building guardrails, especially when the content and outcomes matter.

Users and use-cases

Novice employees - Need fast access to expert-level judgment and reusable thinking patterns, because direct training time is limited.

Expert managers (primary users for phase 1) -

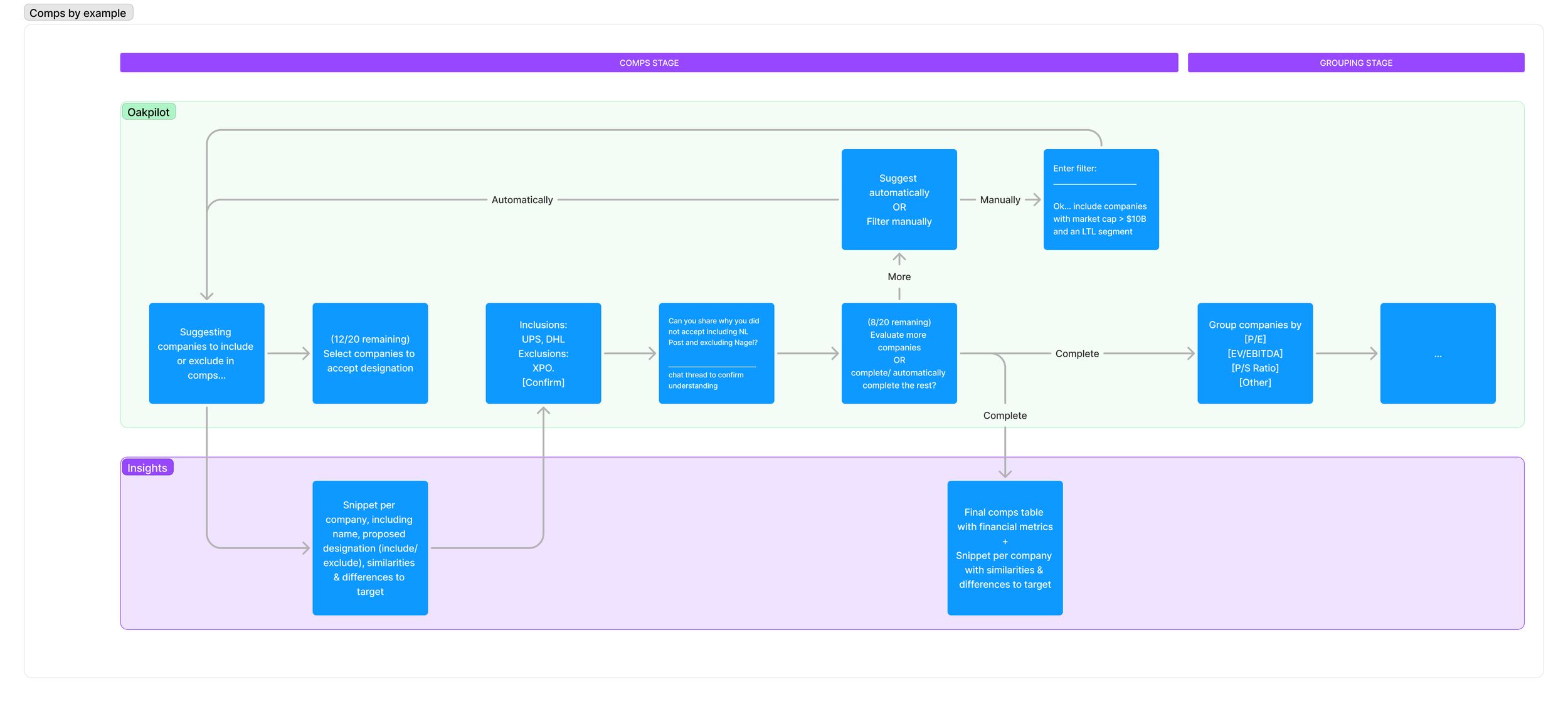

On one hand, they were willing to build the process together with the AI, and they were able to give it direction and spot hallucinations. On the other hand, they needed the tool to support them with streamlined flows, automation of repetitive steps, and clear identification of sources and reasoning.

Close collaboration with our prospective users was essential to understand their complex workflows.

Collaborating with users/partners

As an early-stage product, we faced tight timelines, shifting requirements, and the challenge of earning user trust in complex, high-stakes decisions.

Preliminary research

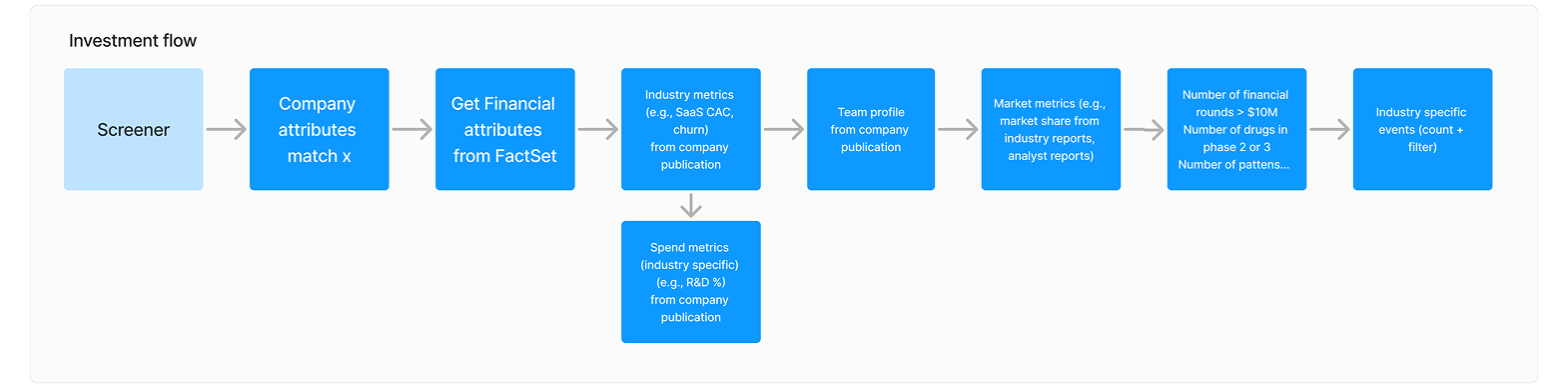

I combined interviews, workflow mapping in FigJam, shared documentation, and hands-on testing with LLM tools to understand:

Experts’ workflows and how they verify and trust data

How knowledge is currently transferred between levels of the hierarchy

How our prospective users engage with conversational AI, what they expect from it, and the practical and usability risks involved

User workflow example

Competitive research and technical practice

To ground design decisions, I studied both the market and the technology. I analyzed interaction patterns and usability risks across major LLM and chat products and AI‑enabled tools like Notion, Adobe, and Slack bots. In parallel, I ran hands‑on tests with GPT workflows, datasets, and our own prototypes to see where models perform well, where they fail, and how UX can compensate for latency, ambiguity, and hallucinations.

I summarized some of my usability research findings in a Medium article:

Contemporary usability challenges for Chat & LLM‑powered applications.

Wireframes & Mockups

Throughout the process, I used what I learned from interviews and research to evolve user flows and scripts into wireframes.

I adapted the styling of the wireframes to the audience and the purpose of each discussion. For example, developers would sometimes get overwhelmed by too much detail, while investors wanted to see a market-ready experience with a high level of detail.

Our day-to-day relied on the notion that mockups turn vague ideas into actionable goals.

Wireframes and mockups

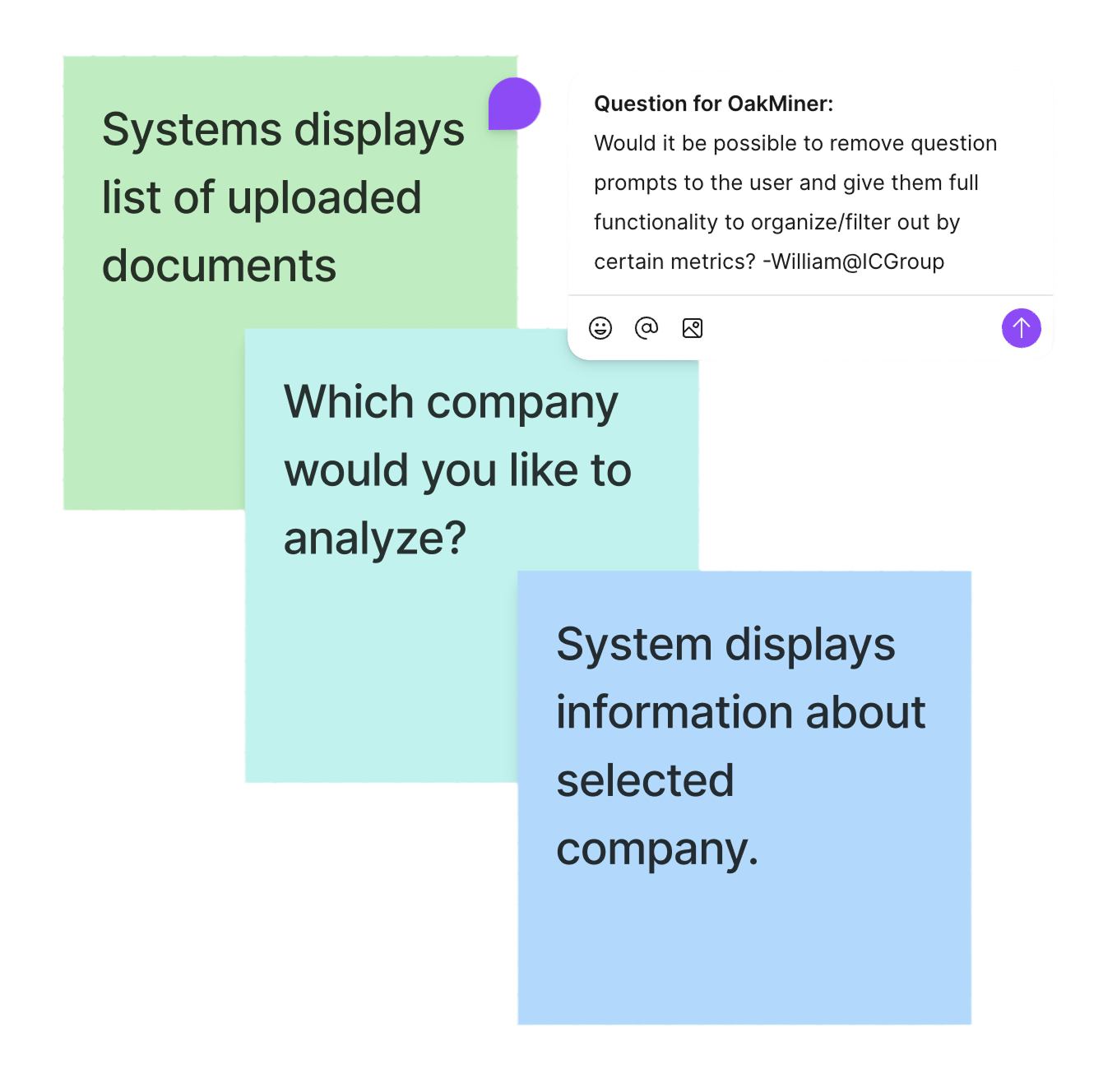

Interaction workflow example

So, why did we use a conversational interface?

Currently, LLMs primarily use a chat interface for user interaction. Exploring the possibilities of LLM UI opens up many considerations and expectations, both for users and for the product team. Our observations suggested that we could use chat as the primary mode of interaction, but that we had to preserve a few principles to mitigate potential usability challenges.

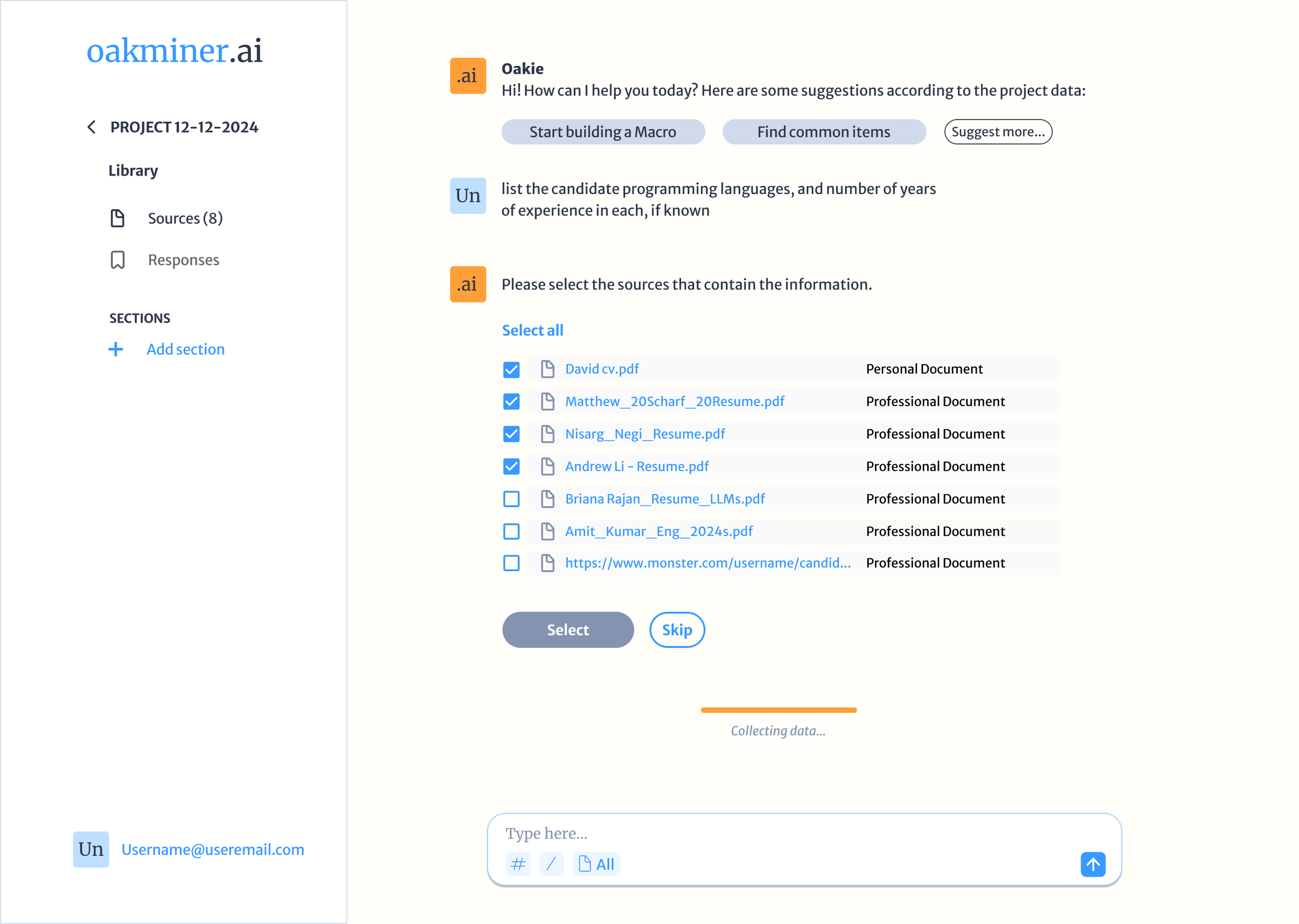

Designing Conversational UX

Conversational UX design focuses on creating intuitive and engaging dialogues between humans and machines. It combines UX principles, linguistics, and AI technologies to create interactions that feel human and natural.

Design principles for trustworthy and usable AI

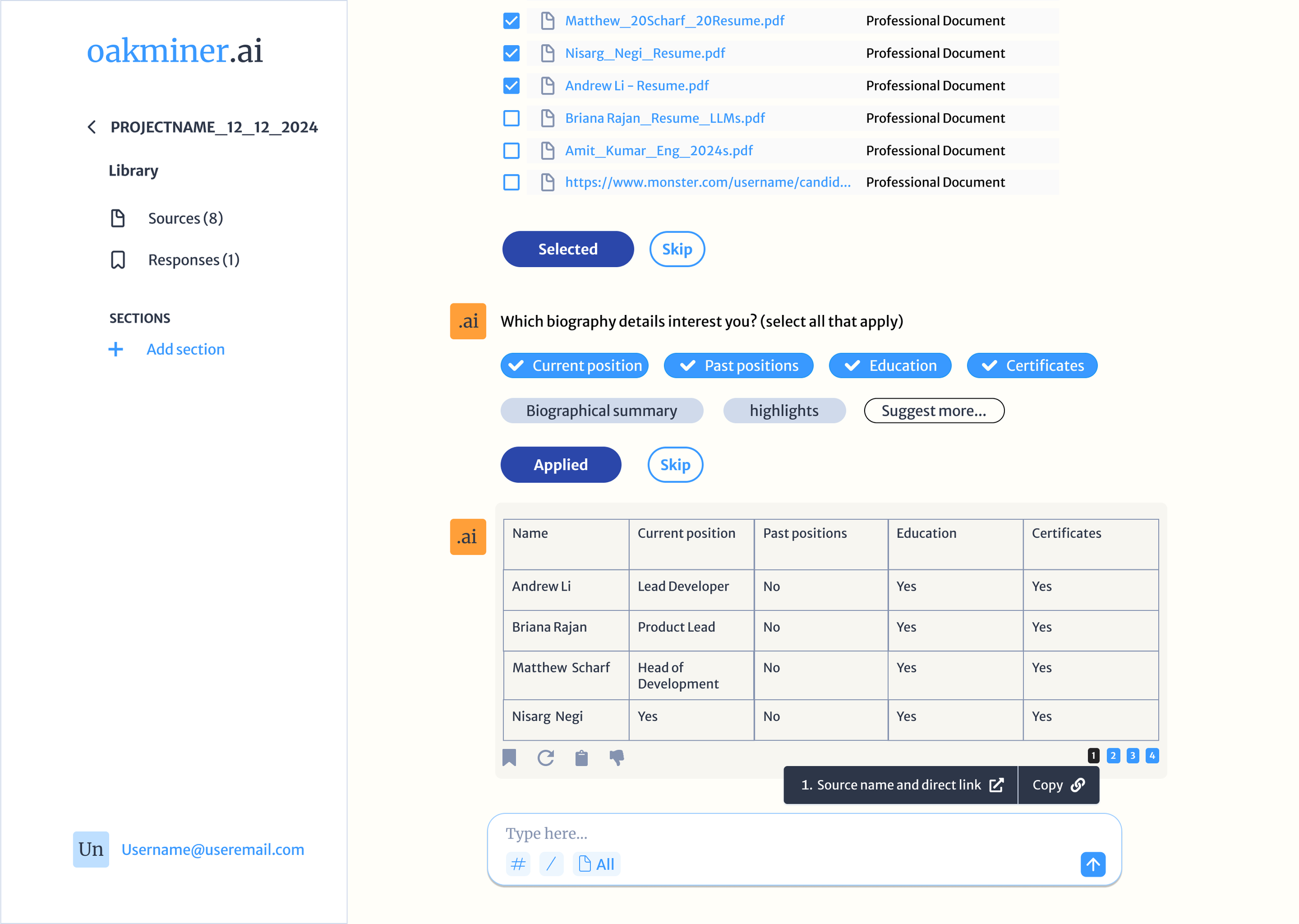

Clarity & Confidence

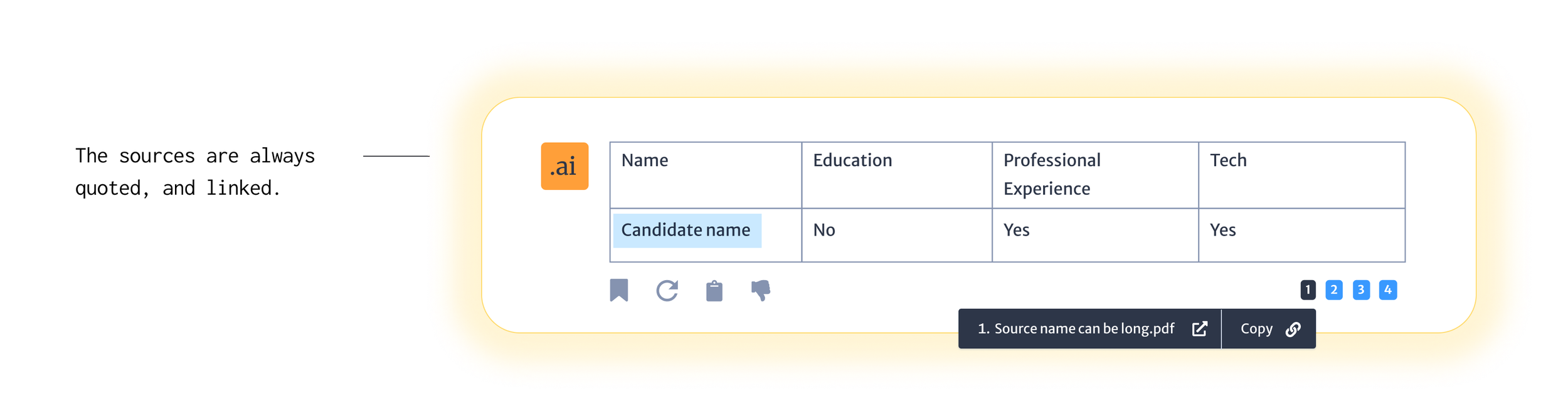

LLMs are a new technology, so they lack the credibility of older, more established platforms. To gain our users' trust, we developed a few strategies that help them test and verify the results.

Guidance & Context

We wanted the UX to be self-explanatory, and the LLM to push for progress and engagement. This also helps the system learn more about the user’s preferences.

Persona & Tone of Voice

We had to balance the AI’s roles as both student and teacher, depending on the user. We framed the agent’s tone as Friendly, Helpful, and Trustworthy.

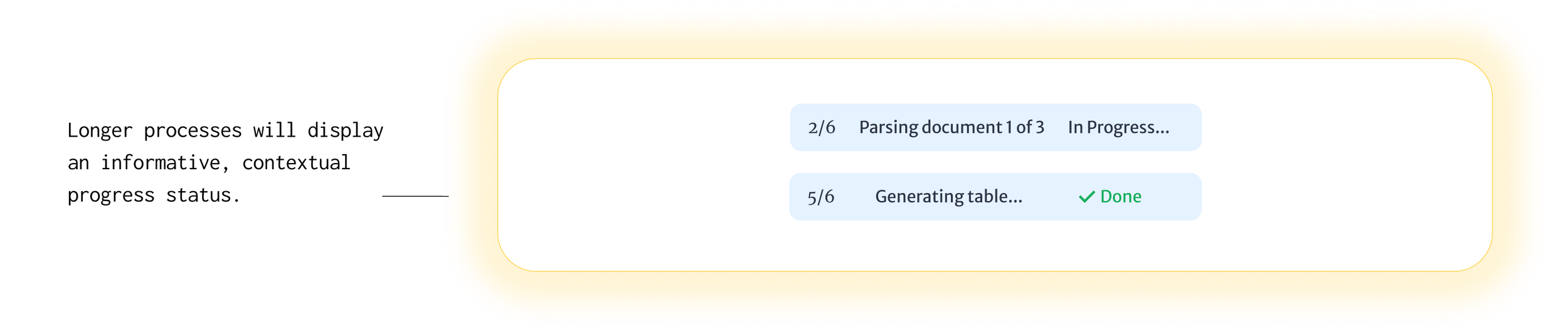

Fallback Strategies

We needed to create flows that addressed potential technology challenges such as lag, hallucinations, and inaccuracies. Alerts should engage users and keep them in the loop.

Similarly, the Save, Re-generate, Copy, and Feedback tools serve a dual purpose: they enhance user functionality and, with tracking, allow the product team to assess the AI's performance and develop changes.

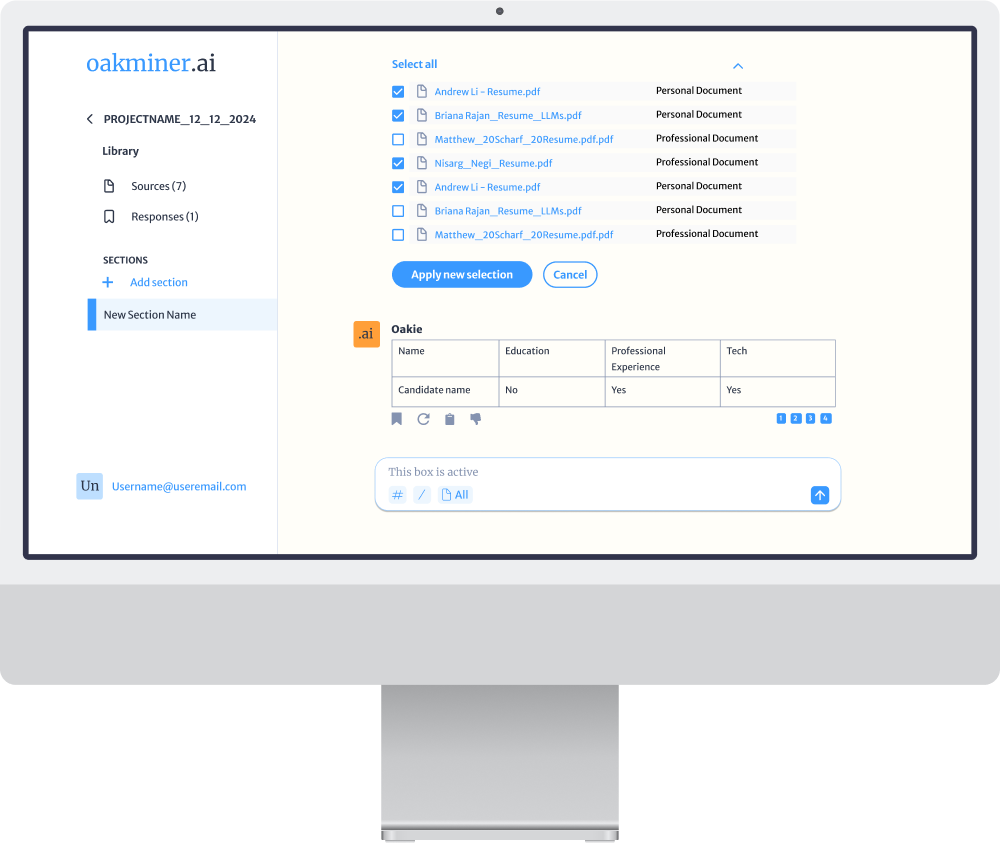

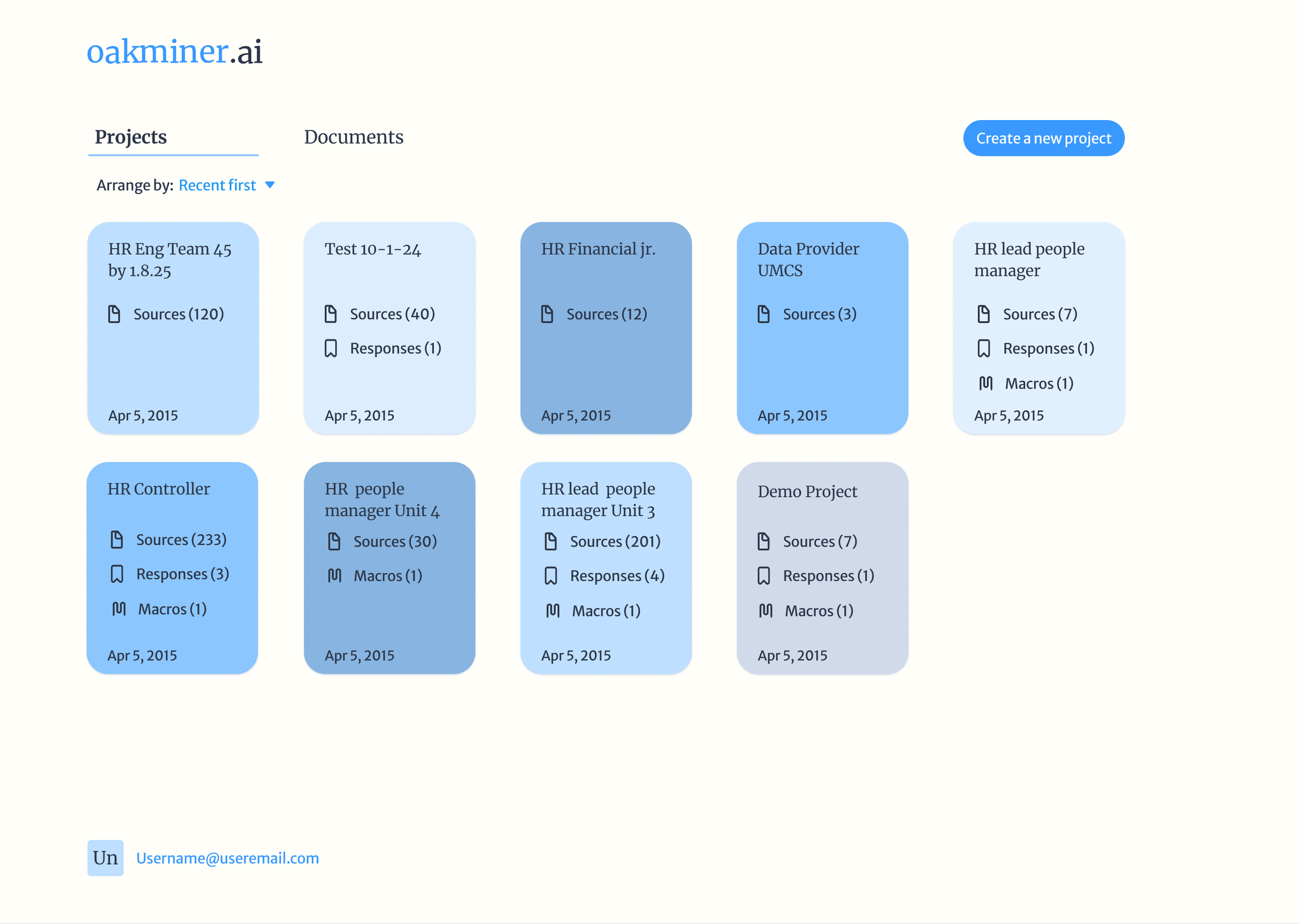

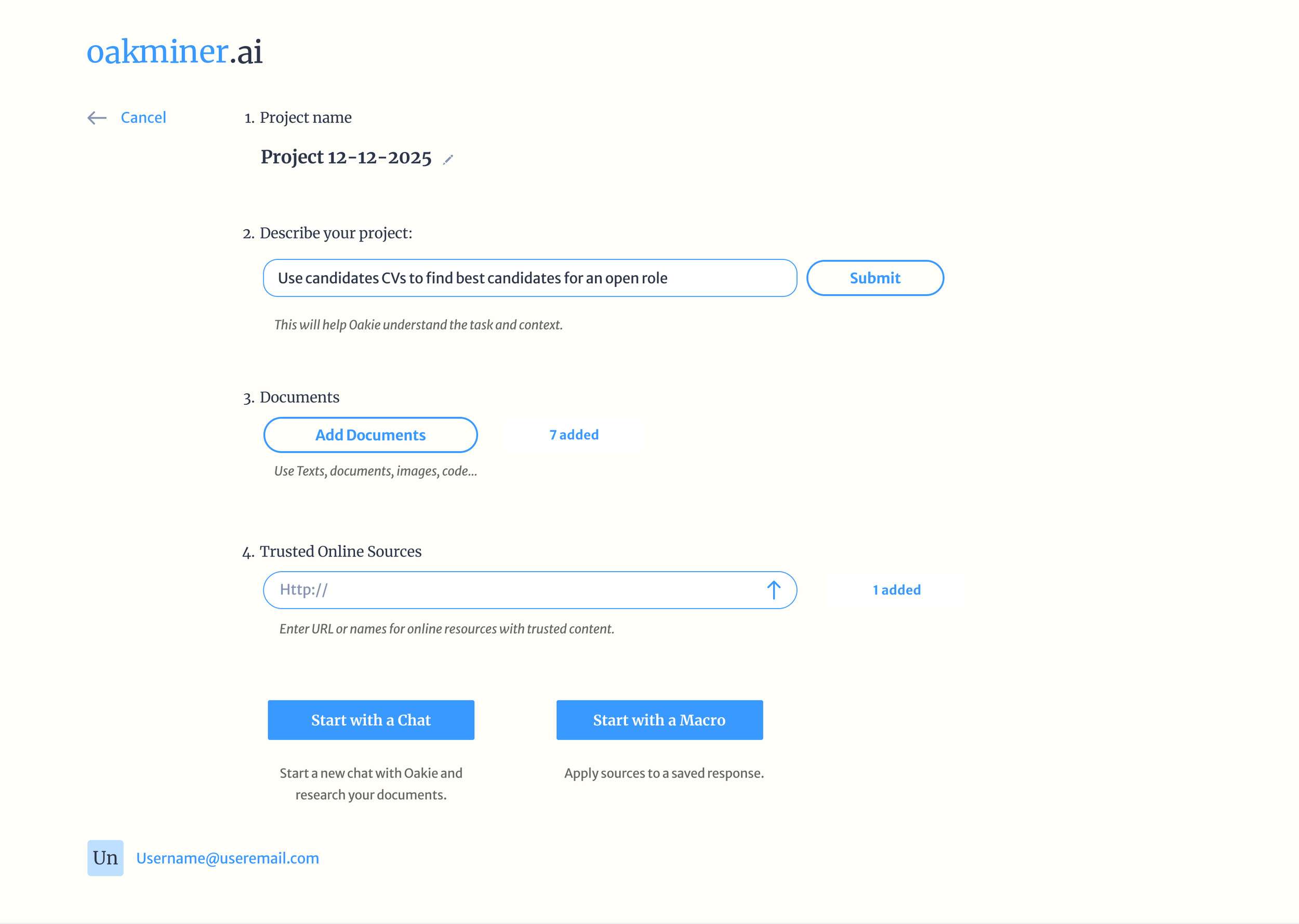

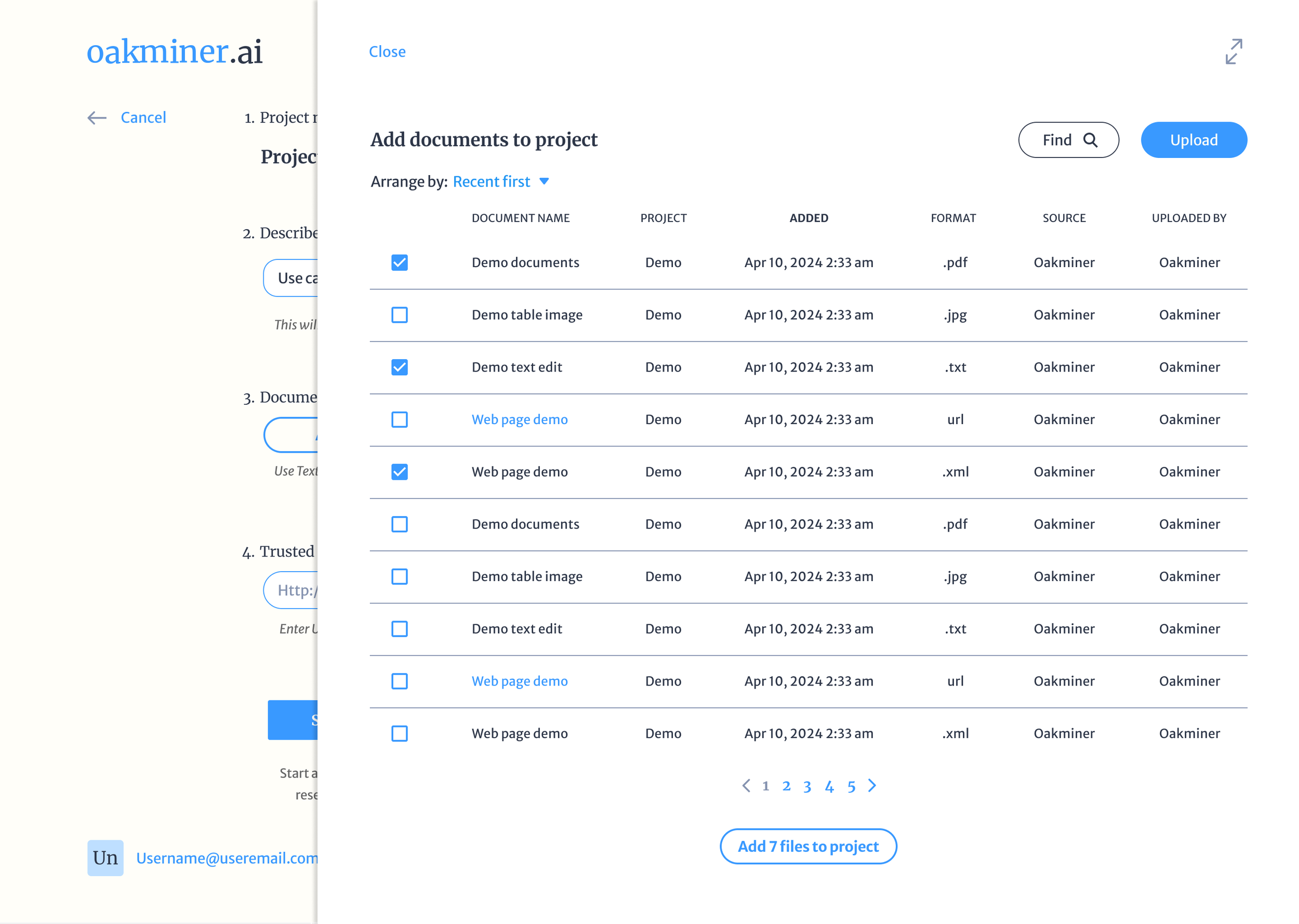

Project setup and document ingestion

A conversation that stays grounded in sources

A library that preserves work and enables reuse

Putting it all together

I set out to connect the design decisions and principles into end-to-end workflows.

Design system & components library

Working with components made my work much easier. Setting up a style library based on Atomic Design concepts, and evolving it into increasingly complex components, helped the engineers feel grounded when developing the front end. Not all states made it into the earlier iterations, but the growing library helped the developers and me move quickly to test and validate our product.

Impact

0 to 1

Over the course of six months, we built the live front-end experience of Oakie AI.

Real Users

Once our closed beta was complete, many of our advisors became clients.

Acceleration

A flexible design system enabled us to iterate and scale the product quickly.